Introduce basics of Markov models. Define terminology for Markov chains. Discuss properties of Markov chains. Show examples of Markov chain analysis. On-Off traffic model. Markov …

Transform a Process to a Markov Chain

Sometimes a non-Markovian stochastic process can be transformed into a Markov chain by expanding the state space. Example: Suppose that the …
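
Here is a minimal sketch of state-space expansion. It assumes a hypothetical weather process in which tomorrow's weather depends on both today and yesterday. The probabilities in `P_RAIN` are illustrative and do not come from the source. This process is not Markov in the daily weather alone. If the state is instead the pair (yesterday, today), the result is a first-order chain with four states:

```python
from itertools import product

# Hypothetical second-order process: P(rain tomorrow | weather yesterday, weather today).
# These probabilities are illustrative assumptions, not taken from the source.
P_RAIN = {
    ("R", "R"): 0.7,
    ("S", "R"): 0.5,
    ("R", "S"): 0.4,
    ("S", "S"): 0.2,
}

def expand_to_first_order(p_rain):
    """Build a first-order chain whose states are (yesterday, today) pairs."""
    states = list(product("RS", repeat=2))  # ('R','R'), ('R','S'), ('S','R'), ('S','S')
    matrix = {s: {t: 0.0 for t in states} for s in states}
    for yesterday, today in states:
        p = p_rain[(yesterday, today)]
        # The window shifts by one day: the next state is (today, tomorrow).
        matrix[(yesterday, today)][(today, "R")] = p
        matrix[(yesterday, today)][(today, "S")] = 1.0 - p
    return states, matrix

states, T = expand_to_first_order(P_RAIN)
for s in states:
    print(s, {t: round(v, 2) for t, v in T[s].items() if v > 0})
```

Each row has only two non-zero entries because the next pair must begin with today's weather.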

For this, we need to decide which parts of a given long sequence of letters are more likely to come from the “+” model and which parts are more likely to come from the “–” model. This is done …
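
One common way to make this decision is to score each window of the sequence by the log-odds ratio $\sum \log_2 (p^+_{ab} / p^-_{ab})$, summed over consecutive letter pairs $ab$. A positive score favours the “+” model. This sketch rests on stated assumptions. The `PLUS` and `MINUS` transition tables are hypothetical toy values, uniform except for the row for `C`, and are not estimated parameters. `score_windows` is an illustrative helper:

```python
import math

ALPHABET = "ACGT"

def make_model(c_row):
    """Hypothetical toy transition model: uniform rows except the row for 'C'."""
    model = {a: {b: 0.25 for b in ALPHABET} for a in ALPHABET}
    model["C"] = dict(c_row)
    return model

# Illustrative parameters (assumptions): the "+" model favours C->G, the "–" model depletes it.
PLUS = make_model({"A": 0.2, "C": 0.3, "G": 0.3, "T": 0.2})
MINUS = make_model({"A": 0.3, "C": 0.3, "G": 0.1, "T": 0.3})

def log_odds(seq, plus=PLUS, minus=MINUS):
    """Sum of log2(P+ / P-) over consecutive letter pairs; > 0 favours the '+' model."""
    return sum(math.log2(plus[a][b] / minus[a][b]) for a, b in zip(seq, seq[1:]))

def score_windows(seq, width):
    """Score every window of the given width to locate '+'-like and '-'-like regions."""
    return [(i, log_odds(seq[i:i + width])) for i in range(len(seq) - width + 1)]

for start, score in score_windows("ATATCGCGCGATAT", width=6):
    print(start, round(score, 2), "+" if score > 0 else "-")
```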


Hidden Markov Models

Overview: general characteristics; a simple example; speech recognition. Andrei Markov: Russian statistician (1856 – 1922) who studied temporal probability models. Markov assumption: Statet …

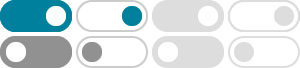

Transition Matrix

To completely describe a Markov chain, we must specify the transition probabilities $p_{ij} = P(X_{t+1} = j \mid X_t = i)$ in a one-step transition matrix $P$: Markov Chain Diagram …
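
Below is a small, hypothetical three-state example of a one-step transition matrix in plain Python. Each row holds $p_{i1}, \dots, p_{in}$ for a fixed current state $i$, so every row must sum to 1. Multi-step probabilities then follow from matrix powers: $P(X_{t+n} = j \mid X_t = i) = (P^n)_{ij}$. The matrix entries are illustrative assumptions:

```python
# Hypothetical 3-state chain; entries are illustrative, not from the source.
# P[i][j] = p_ij = P(X_{t+1} = j | X_t = i), so each row must sum to 1.
P = [
    [0.5, 0.4, 0.1],
    [0.3, 0.4, 0.3],
    [0.2, 0.3, 0.5],
]

def is_stochastic(matrix, tol=1e-9):
    """Check that all entries are non-negative and every row sums to 1."""
    return all(
        all(p >= 0 for p in row) and abs(sum(row) - 1.0) < tol
        for row in matrix
    )

def mat_mul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][r] * b[r][j] for r in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def n_step(matrix, n):
    """n-step transition probabilities: entry (i, j) of P^n (Chapman-Kolmogorov)."""
    size = len(matrix)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, matrix)
    return result

def distribution_after(initial, matrix, n):
    """Row vector pi_0 P^n: the distribution of X_n when X_0 ~ initial."""
    return mat_mul([initial], n_step(matrix, n))[0]

assert is_stochastic(P)
P2 = n_step(P, 2)
print([round(p, 3) for p in P2[0]])  # -> [0.39, 0.39, 0.22]
print([round(p, 3) for p in distribution_after([1.0, 0.0, 0.0], P, 2)])
```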

Estimating Markov Models: Monte Carlo Simulation

Instead of processing an entire cohort and applying probabilities to the cohort, simulate a large number (e.g., 10,000) of cases proceeding …
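
Here is a minimal sketch that contrasts the two approaches. It assumes a hypothetical Well/Sick/Dead model with made-up annual transition probabilities. `cohort` pushes the whole distribution through each cycle. `monte_carlo` instead simulates 10,000 individual cases and tallies the states they end in. The two results should agree up to sampling error:

```python
import random

# Hypothetical annual transition probabilities (assumptions for illustration only).
STATES = ["Well", "Sick", "Dead"]
P = {
    "Well": {"Well": 0.85, "Sick": 0.10, "Dead": 0.05},
    "Sick": {"Well": 0.20, "Sick": 0.60, "Dead": 0.20},
    "Dead": {"Well": 0.00, "Sick": 0.00, "Dead": 1.00},  # absorbing state
}

def cohort(start, cycles):
    """Deterministic cohort calculation: push the whole distribution through each cycle."""
    dist = {s: (1.0 if s == start else 0.0) for s in STATES}
    for _ in range(cycles):
        dist = {j: sum(dist[i] * P[i][j] for i in STATES) for j in STATES}
    return dist

def monte_carlo(start, cycles, n_cases=10_000, seed=0):
    """Simulate individual cases one at a time and tally their final states."""
    rng = random.Random(seed)
    counts = {s: 0 for s in STATES}
    for _ in range(n_cases):
        state = start
        for _ in range(cycles):
            row = P[state]
            state = rng.choices(STATES, weights=[row[s] for s in STATES])[0]
        counts[state] += 1
    return {s: counts[s] / n_cases for s in STATES}

exact = cohort("Well", cycles=5)
estimate = monte_carlo("Well", cycles=5)
for s in STATES:
    print(f"{s:5s} cohort={exact[s]:.3f} simulated={estimate[s]:.3f}")
```

The cohort calculation is exact for the given probabilities. Simulating individuals is mainly useful when each case needs to carry its own history, which a single cohort vector cannot track.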

Markov processes and Hidden Markov Models (HMMs)
Instructor: Vincent Conitzer.

Markov Process

A Markov process is a simple stochastic process in which the distribution of future states depends only on the present state and not on how the process arrived at the present state.
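
The on-off traffic model in the outline above is a two-state example. In this sketch the switching probabilities are hypothetical. `step` draws the next state from the current state alone, which is exactly the Markov property. The simulation then compares the long-run fraction of time spent ON against the two-state stationary value $a/(a+b)$:

```python
import random

# Two-state On/Off chain; the switching probabilities are hypothetical.
P_OFF_TO_ON = 0.2   # a = P(X_{t+1} = ON | X_t = OFF)
P_ON_TO_OFF = 0.4   # b = P(X_{t+1} = OFF | X_t = ON)

def step(state, rng):
    """Draw the next state using only the current state (the Markov property)."""
    if state == "ON":
        return "OFF" if rng.random() < P_ON_TO_OFF else "ON"
    return "ON" if rng.random() < P_OFF_TO_ON else "OFF"

def simulate(n_steps, start="OFF", seed=1):
    """Generate a sample path of length n_steps + 1 starting from `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        path.append(step(path[-1], rng))
    return path

path = simulate(100_000)
empirical_on = path.count("ON") / len(path)
stationary_on = P_OFF_TO_ON / (P_OFF_TO_ON + P_ON_TO_OFF)  # a / (a + b)
print(f"fraction ON: simulated={empirical_on:.3f}, stationary={stationary_on:.3f}")
```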

In HMMs, we see a sequence of observations and try to reason about the underlying state sequence. There are no actions involved. But what if we have to take an action at each step …
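
The Viterbi algorithm is one way to reason about the hidden state sequence: it finds the single most likely state sequence given the observations. The two-state weather HMM below (`START`, `TRANS`, `EMIT`) is a hypothetical textbook-style example. The recursion works in log space to avoid numerical underflow on long observation sequences:

```python
import math

# Hypothetical two-state HMM (parameters are illustrative assumptions).
STATES = ("Rainy", "Sunny")
START = {"Rainy": 0.6, "Sunny": 0.4}
TRANS = {"Rainy": {"Rainy": 0.7, "Sunny": 0.3}, "Sunny": {"Rainy": 0.4, "Sunny": 0.6}}
EMIT = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}

def viterbi(observations):
    """Most likely hidden state sequence for the observations (log-space Viterbi)."""
    # best[s]: log-probability of the best path ending in state s at the current step.
    best = {s: math.log(START[s]) + math.log(EMIT[s][observations[0]]) for s in STATES}
    backpointers = []
    for obs in observations[1:]:
        prev_best, best, pointers = best, {}, {}
        for s in STATES:
            # Choose the predecessor that maximises the path score into state s.
            prev = max(STATES, key=lambda r: prev_best[r] + math.log(TRANS[r][s]))
            best[s] = prev_best[prev] + math.log(TRANS[prev][s]) + math.log(EMIT[s][obs])
            pointers[s] = prev
        backpointers.append(pointers)
    # Trace back from the best final state.
    last = max(STATES, key=lambda s: best[s])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, math.exp(best[last])

path, prob = viterbi(["walk", "shop", "clean"])
print(path, round(prob, 5))  # -> ['Sunny', 'Rainy', 'Rainy'] 0.01344
```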

Markov: The system restarts itself at the instant of every transition. Fresh control decisions are taken at the instant of each transition.